XGBoost is an implementation of gradient-boosted decision trees. The library is written in C++ and was designed to improve training speed and model performance. It has become very popular in applied machine learning, and XGBoost models have featured prominently in many Kaggle competitions.

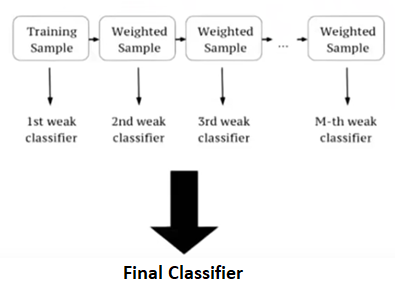

In this algorithm, decision trees are built sequentially. Weights play an important role in XGBoost: the training examples that the current trees predict wrongly are given more influence when the next tree is fit, so each new tree concentrates on the errors of the ones before it. These individual predictors are then combined into an ensemble that gives a stronger and more precise model. XGBoost can handle regression, classification, ranking, and user-defined prediction problems.
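The sequential idea can be sketched in plain Python. This is a minimal illustration of gradient boosting with squared error and one-split trees (stumps), not XGBoost's actual implementation; the helper names `fit_stump` and `boost` and the toy data are hypothetical.

```python
# Minimal gradient boosting for regression with depth-1 trees (stumps).
# Illustrative sketch only: XGBoost also uses second-order gradients,
# regularization, and many system-level optimizations.

def fit_stump(xs, residuals):
    """Pick the threshold on a 1-D feature that best fits the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the max so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def boost(xs, ys, n_trees=50, learning_rate=0.3):
    """Fit trees one after another, each on the errors left by the previous ones."""
    base = sum(ys) / len(ys)          # start from the mean prediction
    preds = [base] * len(ys)
    trees = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]  # what is still wrong
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(t(x) for t in trees)


def mse(predict, xs, ys):
    return sum((y - predict(x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Toy data, made up for this example.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.2, 5.0]

mean_y = sum(ys) / len(ys)
initial_error = mse(lambda x: mean_y, xs, ys)
model = boost(xs, ys)
final_error = mse(model, xs, ys)
print(f"training MSE before boosting: {initial_error:.4f}")
print(f"training MSE after boosting:  {final_error:.4f}")
```

Each round fits a small tree to the current residuals and adds a scaled copy of it to the ensemble, so the training error shrinks as trees accumulate.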

XGBoost Features

The library is laser-focused on computational speed and model performance; as such, there are few frills.

Model Features

Three main forms of gradient boosting are supported:

- Gradient Boosting

- Stochastic Gradient Boosting

- Regularized Gradient Boosting
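As a rough guide, the three forms map onto groups of parameters in XGBoost's scikit-learn-style interface. The sketch below only builds a dictionary of settings; the values are hypothetical choices for illustration, not recommendations.

```python
# Parameter names follow XGBoost's scikit-learn-style API; values are illustrative.

# Plain gradient boosting: number of trees, step size, and tree depth.
gradient_boosting = {"n_estimators": 100, "learning_rate": 0.1, "max_depth": 3}

# Stochastic gradient boosting: sample rows and columns for each tree.
stochastic = {"subsample": 0.8, "colsample_bytree": 0.8}

# Regularized gradient boosting: L1 (alpha) and L2 (lambda) penalties on leaf weights.
regularized = {"reg_alpha": 0.0, "reg_lambda": 1.0}

params = {**gradient_boosting, **stochastic, **regularized}
print(params)

# With xgboost installed, these could be passed as: xgboost.XGBClassifier(**params)
```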

System Features

For use across a range of computing environments, the library provides:

- Parallelization of tree construction

- Distributed Computing for training very large models

- Cache Optimization of data structures and algorithms

Steps to Install

Windows

XGBoost uses Git submodules to manage dependencies, so when you clone the repo, remember to specify the --recursive option:

git clone --recursive https://github.com/dmlc/xgboost

Windows users who use GitHub tools can open the Git shell and type the following commands:

git submodule init

git submodule update

OSX(Mac)

First, obtain gcc-8 with Homebrew (https://brew.sh/) to enable multi-threading (i.e. using multiple CPU threads for training). The default Apple Clang compiler does not support OpenMP, so building with it disables multi-threading.

brew install gcc@8

Then install XGBoost with pip:

pip3 install xgboost

You might need to run the command with the --user flag if you run into permission errors.

Code: Python code for XGB Classifier

Output

Accuracy will be about 0.8645
