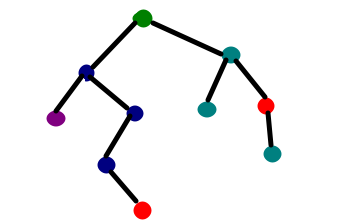

The decision tree is one of the most powerful and popular machine learning algorithms. It falls under the category of supervised learning algorithms and works for both continuous and categorical output variables.

Prerequisites: Decision Tree, DecisionTreeClassifier, sklearn, numpy, pandas


In this video, we are going to implement a decision tree algorithm on the Balance Scale Weight & Distance Database available from the UCI Machine Learning Repository.
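As a rough idea of what such an implementation can look like, here is a minimal sketch using `DecisionTreeClassifier` from sklearn. Several things in it are assumptions rather than part of this article: the table is rebuilt locally from every combination of the four attributes instead of being downloaded, the labelling rule in `balance_class` (compare left weight × distance against right weight × distance) follows the UCI description of the dataset, and the split ratio, seed and tree hyperparameters are illustrative choices only.

```python
from itertools import product

import pandas as pd
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

FEATURES = ["Left-Weight", "Left-Distance", "Right-Weight", "Right-Distance"]


def balance_class(lw, ld, rw, rd):
    """Assumed labelling rule: compare weight * distance on each side."""
    left, right = lw * ld, rw * rd
    if left > right:
        return "L"
    if left < right:
        return "R"
    return "B"


# Rebuild the table locally: every combination of the four attributes (1..5).
rows = [(balance_class(*combo), *combo) for combo in product(range(1, 6), repeat=4)]
data = pd.DataFrame(rows, columns=["Class"] + FEATURES)

X = data[FEATURES]
y = data["Class"]

# Hold out 30% of the rows for testing; ratio and seed are illustrative.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=100, stratify=y
)

# Hyperparameters are illustrative choices, not values given in the article.
clf = DecisionTreeClassifier(criterion="gini", max_depth=3, random_state=100)
clf.fit(X_train, y_train)

accuracy = accuracy_score(y_test, clf.predict(X_test))
print(f"Test accuracy: {accuracy:.2%}")
```

A shallow tree (`max_depth=3`) keeps the model easy to inspect. Raising the depth or switching `criterion` to `"entropy"` are natural things to experiment with.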

Dataset Description:

Title: Balance Scale Weight & Distance Database

Number of Instances: 625 (49 balanced, 288 left, 288 right)

Number of Attributes: 4 (numeric) + class name = 5

Attribute Information:

- Class Name (Target variable): 3

  - L [balance scale tips to the left]

  - B [balance scale is balanced]

  - R [balance scale tips to the right]

- Left-Weight: 5 (1, 2, 3, 4, 5)

- Left-Distance: 5 (1, 2, 3, 4, 5)

- Right-Weight: 5 (1, 2, 3, 4, 5)

- Right-Distance: 5 (1, 2, 3, 4, 5)

Missing Attribute Values: None

You can find more details of the dataset here.

Class Distribution:

- 46.08 percent are L

- 7.84 percent are B

- 46.08 percent are R
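The class distribution above can be checked with a few lines of plain Python. This is only a sketch under one assumption that is not stated in this article: following the UCI description of the dataset, the class is taken to be the side with the larger weight × distance product, with `B` when both products are equal. The helper name `balance_class` is made up for this illustration.

```python
from collections import Counter
from itertools import product


def balance_class(lw, ld, rw, rd):
    """Assumed labelling rule: compare weight * distance on each side."""
    left, right = lw * ld, rw * rd
    if left > right:
        return "L"
    if left < right:
        return "R"
    return "B"


# Enumerate all 5 * 5 * 5 * 5 = 625 attribute combinations and count the classes.
counts = Counter(balance_class(*combo) for combo in product(range(1, 6), repeat=4))
total = sum(counts.values())

for label in ("L", "B", "R"):
    share = 100 * counts[label] / total
    print(f"{label}: {counts[label]} instances, {share:.2f} percent")
```

Under that assumption the counts come out to 288 for L, 49 for B and 288 for R, which matches the instance counts and percentages listed in the description.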

